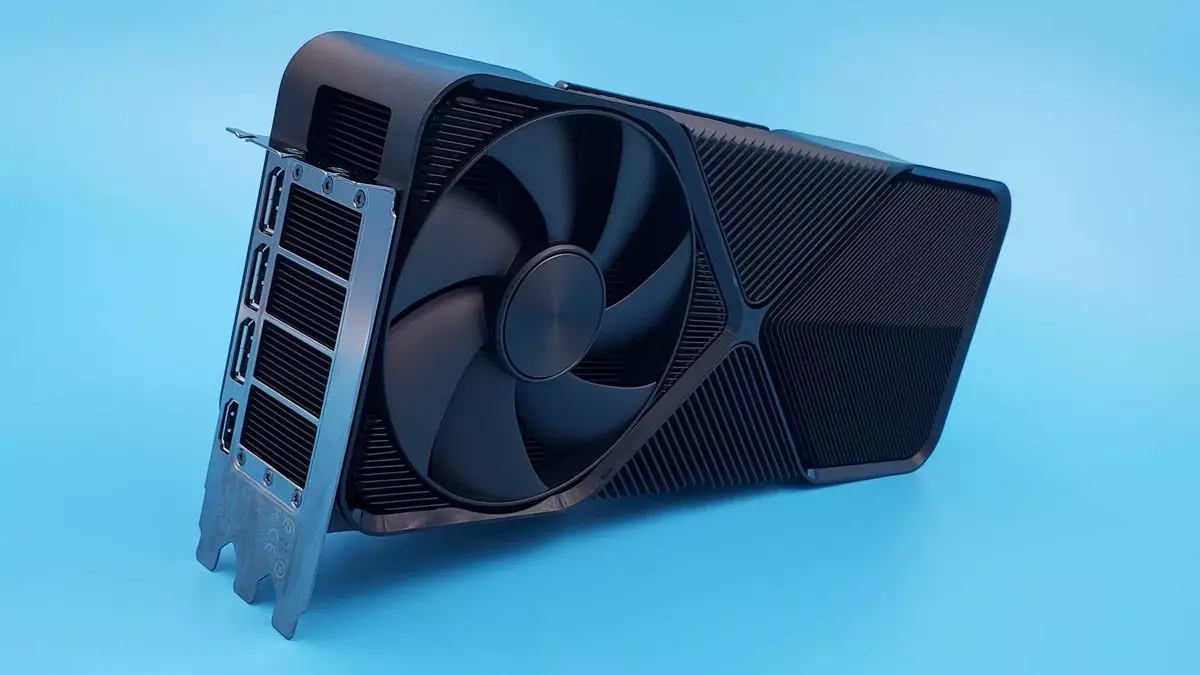

As gaming technology evolves rapidly, Nvidia remains at the forefront. With rumors building around the RTX 5090 GPU, speculation has turned to innovations that could significantly alter gaming visuals. Central to this conversation is **neural rendering**, an approach that aims to change how games are rendered. This article examines the implications of these developments, particularly with Nvidia’s upcoming Blackwell architecture expected to be showcased at CES 2025.

Traditionally, game graphics rely on established 3D rendering techniques, using GPUs to produce lifelike environments and characters. However, as demand for more immersive experiences grows, the industry has begun exploring AI-driven technologies. The main driver is the promise of generating graphics through learned models, potentially shifting away from conventional pipelines toward fully AI-rendered scenes.

Recent leaks from graphics card manufacturer INNO3D hint at this shift accompanying the launch of Nvidia’s next-generation GPUs. Although the leaked material does not explicitly mention the RTX 5090, it references Nvidia technologies such as DLSS (Deep Learning Super Sampling) and ray-tracing cores. Its emphasis on improved AI capabilities and neural rendering points to a future where graphics could be enhanced dynamically through machine learning.

To understand neural rendering, it helps to look at how the technology processes visual data. Traditional rendering can be computationally expensive; Nvidia’s position is that machine learning can make it significantly more efficient. The vision is for a neural network to interpret scene data, such as object positions and environmental variables, and generate images in real time, streamlining rendering and reaching a level of graphical fidelity that has not yet been achieved.

Bryan Catanzaro, Nvidia’s VP of Applied Deep Learning Research, has said that the company has demonstrated real-time neural rendering, albeit at an early stage. So far, the results have not reached the quality of contemporary games such as “Cyberpunk 2077.” Nevertheless, Nvidia asserts that existing technologies like DLSS already operate at a level where only a fraction of the visual data comes from the traditional GPU pipeline.
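To make the "only a fraction" claim concrete, here is a rough back-of-the-envelope sketch. The specific numbers are illustrative assumptions, not figures from the leak or from Catanzaro: an upscaler that renders at half resolution on each axis, and a frame generator that inserts one AI-generated frame per conventionally rendered frame. The function name is hypothetical.

```python
from fractions import Fraction


def traditionally_rendered_fraction(axis_scale: Fraction,
                                    generated_per_rendered: int) -> Fraction:
    """Share of displayed pixels that come from the conventional pipeline.

    axis_scale: internal render resolution relative to output, per axis
                (assumed value; 1/2 means half width and half height).
    generated_per_rendered: AI-generated frames inserted per rendered frame
                (assumed value).
    """
    # Upscaling: only axis_scale^2 of the pixels in a rendered frame are computed.
    spatial_share = axis_scale * axis_scale
    # Frame generation: only 1 of every (1 + n) displayed frames is rendered.
    temporal_share = Fraction(1, 1 + generated_per_rendered)
    return spatial_share * temporal_share


share = traditionally_rendered_fraction(Fraction(1, 2), 1)
print(share)  # 1/8
```

Under these assumptions, one pixel in eight is produced by the traditional pipeline and the rest are inferred. Different quality modes or frame-generation ratios would change the result.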

While fully AI-rendered graphics capture the imagination, practical implementation is likely to be more nuanced. Generating every pixel on screen with AI could face significant challenges. More likely, Nvidia may integrate AI incrementally into specific parts of the rendering pipeline without discarding traditional rendering entirely. One possibility is real-time neural radiance caching during path tracing, where a learned model could shortcut steps that typically strain resources, improving both performance and graphical quality.
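The idea behind radiance caching can be sketched in a few lines. This is a toy illustration under stated assumptions, not Nvidia’s implementation: `expensive_tail` is a hypothetical stand-in for tracing the remaining bounces of a path, and `TinyRadianceCache` is a small linear model standing in for the neural network. Only every few paths are traced in full and used to train the cache; the rest are cut short and answered from it.

```python
import math


def expensive_tail(x: float) -> float:
    """Hypothetical stand-in for tracing the remaining bounces of a path."""
    return 0.5 + 0.4 * math.sin(2.0 * x)


class TinyRadianceCache:
    """Linear model on fixed features; a toy stand-in for a small neural network."""

    def __init__(self, learning_rate: float = 0.05) -> None:
        self.weights = [0.0, 0.0, 0.0]
        self.learning_rate = learning_rate

    @staticmethod
    def features(x: float) -> list[float]:
        return [1.0, x, x * x]

    def query(self, x: float) -> float:
        return sum(w * f for w, f in zip(self.weights, self.features(x)))

    def train(self, x: float, target: float) -> None:
        # One stochastic gradient step on the squared prediction error.
        error = self.query(x) - target
        phi = self.features(x)
        self.weights = [w - self.learning_rate * error * f
                        for w, f in zip(self.weights, phi)]


def render(num_paths: int, train_every: int) -> tuple[float, int]:
    """Average radiance over paths, tracing only every `train_every`-th in full."""
    cache = TinyRadianceCache()
    total = 0.0
    full_traces = 0
    for i in range(num_paths):
        x = (i + 0.5) / num_paths          # deterministic sample position in [0, 1)
        if i % train_every == 0:
            radiance = expensive_tail(x)   # full, costly path
            cache.train(x, radiance)       # the cache learns from it online
            full_traces += 1
        else:
            radiance = cache.query(x)      # early termination: ask the cache
        total += radiance
    return total / num_paths, full_traces


mean_radiance, full_traces = render(num_paths=1000, train_every=4)
print(full_traces)  # 250
```

Here only a quarter of the paths pay the full cost. The trade-off is that cached answers are approximate, especially early on before the model has seen many training samples, which is why a production system would need far more care than this sketch shows.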

Moreover, there is a risk that “neural rendering” becomes a catch-all term for existing AI-based enhancements in Nvidia’s portfolio, potentially diluting the significance of the technology for consumers seeking revolutionary changes.

For gamers, the implications of these advancements could be profound. Improved graphical fidelity would enrich the gaming experience, and AI-assisted rendering could also reduce the hardware required to achieve high-quality visuals. For developers, better AI tooling could streamline workflows and open avenues for creative expression. By relying on robust neural networks, developers could build more complex and vibrant worlds while spending fewer resources on routine rendering tasks.

As Nvidia gears up to unveil its upcoming graphics cards at CES 2025, the excitement is palpable. With the company aiming to make better use of Tensor Cores for AI workloads, there is a sense that Nvidia may finally strike a balance between traditional rendering and next-generation AI capabilities, setting the stage for a new era in gaming graphics.

While we remain on the brink of neural rendering, it is important to approach these developments with a healthy dose of skepticism. Nvidia’s commitment to innovation points to a promising future for gaming graphics, one that aims to unify traditional rendering techniques with new AI technologies. The anticipated RTX 5090 may mark a significant turning point in gaming, yet fully realizing this vision will require thorough exploration and careful calibration of both the technology and consumer expectations. Ultimately, AI in gaming graphics suggests a future where realism and performance together raise the player experience to new heights.